Senses Pack for Behavior Designer Pro

The Senses Pack for Behavior Designer Pro provides a comprehensive set of sensors and tasks for creating intelligent AI behaviors based on environmental perception. The pack is built around the following concepts:

Sense

Sensors perceive the following kinds of information:

- Visual information (Visibility, Luminance).

- Auditory information (Sound).

- Environmental conditions (Temperature, Surface type).

- Spatial awareness (Distance, Tracing).

Emitter

Emitters are the sources that the sensors detect:

- Sound sources that can be heard by the Sound sensor.

- Temperature sources that can be detected by the Temperature sensor.

- Light sources that affect the Luminance sensor.

- Surface properties that can be detected by the Surface sensor.

Task

Tasks use the sensors within the behavior tree to:

- Check if specific conditions are met (WithinRange, CanDetectObject).

- Query sensor data (GetSensorAmount).

- Execute behaviors based on sensor input (FollowTraceTrail).

- Combine multiple sensor inputs to make complex decisions.

Sensors can find their targets using one of the following detection modes:

- Object: Allows sensors to detect a specific GameObject. This mode is ideal for tracking individual targets or objects of interest.

- Object Array: Enables sensors to detect multiple GameObjects simultaneously. This mode is useful for scenarios where an agent needs to be aware of several objects at once, such as tracking multiple targets or monitoring a group of items.

- Tag: Allows sensors to detect all GameObjects with a specific tag. This mode is particularly useful for detecting objects of a certain type or category, such as all enemies, collectibles, or interactive objects. Note: for performance reasons, this detection mode is not recommended for production use.

- LayerMask: Enables sensors to detect objects on specific layers. This mode is essential for filtering detection based on object categories defined by Unity’s layer system.
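The four detection modes differ only in how the set of candidate objects is chosen. The following is a minimal, engine-independent sketch of that idea in Python. It is not the Senses Pack API: `SceneObject` and the `by_*` helpers are hypothetical names introduced for illustration, and the layer mask is modeled as a plain bit field in the same way Unity's layer system uses one bit per layer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneObject:
    """Hypothetical stand-in for a GameObject."""
    name: str
    tag: str
    layer: int  # layer index (0-31); one bit per layer in a mask


def by_object(objects, target):
    """Object mode: only the single specified object is a candidate."""
    return [o for o in objects if o is target]


def by_object_array(objects, targets):
    """Object Array mode: any object in the supplied list is a candidate."""
    return [o for o in objects if any(o is t for t in targets)]


def by_tag(objects, tag):
    """Tag mode: every object carrying the tag is a candidate."""
    return [o for o in objects if o.tag == tag]


def by_layer_mask(objects, mask):
    """LayerMask mode: every object whose layer bit is set in the mask."""
    return [o for o in objects if mask & (1 << o.layer)]


scene = [
    SceneObject("Player", "Player", 3),
    SceneObject("Guard", "Enemy", 6),
    SceneObject("Archer", "Enemy", 6),
    SceneObject("Crate", "Untagged", 0),
]

print([o.name for o in by_object(scene, scene[0])])
print([o.name for o in by_tag(scene, "Enemy")])
print([o.name for o in by_layer_mask(scene, (1 << 3) | (1 << 6))])
```

In this sketch the Tag and LayerMask modes scan every object in the scene, whereas the Object modes only touch the objects they were given.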

Objects can be detected with different types of physics casts:

- Raycast: Uses a single ray to detect objects in a straight line, ideal for precise line-of-sight checks.

- Sphere Cast: Uses a spherical cast to detect objects in a volume, useful for detecting objects in a specific radius.

- Circle Cast: Uses a circular cast for 2D detection, perfect for 2D games and side-scrollers.

- Capsule Cast: Uses a capsule-shaped cast to detect objects, great for character-sized detection areas.

- Box Cast: Uses a box-shaped cast to detect objects, useful for detecting objects in a rectangular area.
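To illustrate why the cast shape matters, the sketch below compares a thin ray with a sphere cast against a spherical target. It is a geometric illustration only and does not call the physics engine or the Senses Pack: the function names are hypothetical, the ray direction is assumed to be normalized, and a sphere cast of radius r against a sphere of radius R is treated as a ray against a sphere of radius R + r.

```python
import math


def ray_hits_sphere(origin, direction, center, radius, max_distance):
    """Return the hit distance along a normalized ray, or None on a miss."""
    to_center = [c - o for c, o in zip(center, origin)]
    # Distance along the ray to the point closest to the sphere center.
    t_closest = sum(a * b for a, b in zip(to_center, direction))
    # Squared distance from the sphere center to the ray's line.
    dist_sq = sum(a * a for a in to_center) - t_closest ** 2
    if dist_sq > radius ** 2:
        return None
    half_chord = math.sqrt(radius ** 2 - dist_sq)
    if t_closest + half_chord < 0:
        return None  # the sphere lies entirely behind the origin
    # Clamp to 0 when the origin starts inside the sphere (a simplification).
    t_hit = max(0.0, t_closest - half_chord)
    return t_hit if t_hit <= max_distance else None


def sphere_cast_hits_sphere(origin, direction, cast_radius, center, radius,
                            max_distance):
    """Sweep a sphere along the ray by inflating the target by cast_radius."""
    return ray_hits_sphere(origin, direction, center, radius + cast_radius,
                           max_distance)


origin, forward = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)
target_center, target_radius = (1.0, 0.0, 5.0), 0.5

# The target sits one unit to the side, so the thin ray passes beside it.
print(ray_hits_sphere(origin, forward, target_center, target_radius, 10.0))
# A sphere cast with radius 0.75 is wide enough to touch it.
print(sphere_cast_hits_sphere(origin, forward, 0.75, target_center,
                              target_radius, 10.0))
```

The same target is missed by the ray and found by the sphere cast, which is the trade-off between precise line-of-sight checks and volume checks described in the list above.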

Included Sensors

Distance

Luminance

Surface

Sound

Temperature

Tracer

Visibility

The Visibility sensor uses the following colors to indicate the state of the target:

- Red: The target is too far away from the agent.

- Magenta: The target is outside the horizontal field of view.

- Cyan: The target is outside the vertical field of view.

- Yellow: Another object is blocking the target.

- Green: The target can be seen and is within range.
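The color list above reads like a chain of checks, and the sketch below expresses it that way. It is an illustration under stated assumptions rather than the sensor's actual implementation: the function name, its parameters and the order of the checks (distance, horizontal angle, vertical angle, obstruction) are all assumptions, the agent is assumed to be upright with its facing given as a yaw angle, and the obstruction result is passed in as a boolean instead of being computed with a physics cast.

```python
import math


def classify_visibility(agent_pos, yaw_deg, target_pos, max_distance,
                        horizontal_fov_deg, vertical_fov_deg, is_blocked):
    """Return the color that corresponds to the target's visibility state."""
    dx = target_pos[0] - agent_pos[0]
    dy = target_pos[1] - agent_pos[1]
    dz = target_pos[2] - agent_pos[2]

    if math.sqrt(dx * dx + dy * dy + dz * dz) > max_distance:
        return "red"  # too far away

    # Signed horizontal angle between the facing direction and the target,
    # wrapped into the range [-180, 180).
    bearing = math.degrees(math.atan2(dx, dz))
    horizontal = (bearing - yaw_deg + 180.0) % 360.0 - 180.0
    if abs(horizontal) > horizontal_fov_deg / 2.0:
        return "magenta"  # outside the horizontal field of view

    # Elevation angle of the target above or below the agent.
    vertical = math.degrees(math.atan2(dy, math.hypot(dx, dz)))
    if abs(vertical) > vertical_fov_deg / 2.0:
        return "cyan"  # outside the vertical field of view

    if is_blocked:
        return "yellow"  # another object is in the way

    return "green"  # visible and within range


agent = (0.0, 0.0, 0.0)
for target, blocked in [((0, 0, 20), False), ((5, 0, 1), False),
                        ((0, 5, 2), False), ((0, 0, 5), True),
                        ((0, 0, 5), False)]:
    print(target, classify_visibility(agent, 0.0, target, 10.0, 90.0, 60.0,
                                      blocked))
```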

Included Tasks

Can Detect Object

Can Detect Surface

Follow Trace Trail

Get Sensor Amount

Within Range

Task Setup

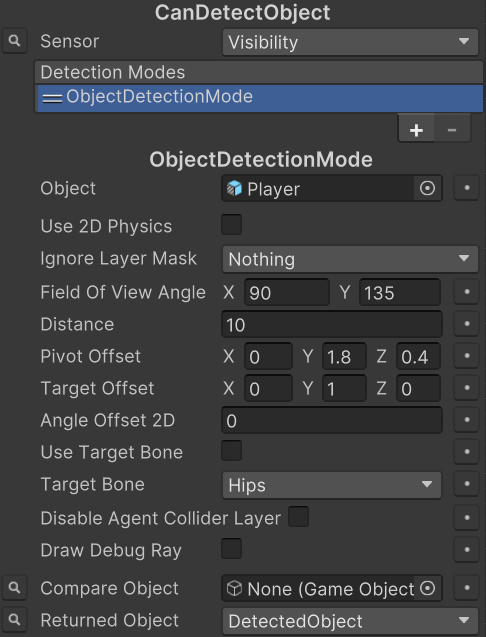

- Add the desired task. This example uses the Can Detect Object task.

- Select the Sensor type.

- Specify how the Sensor should detect the emitter. In the screenshot above we’ve selected the Visibility Sensor with the Object Detection Mode.

The Follow Trace Trail task uses a Movement Pack workflow to detect the trace.

Emitter Setup

The following emitters require setup within the scene:

Luminance

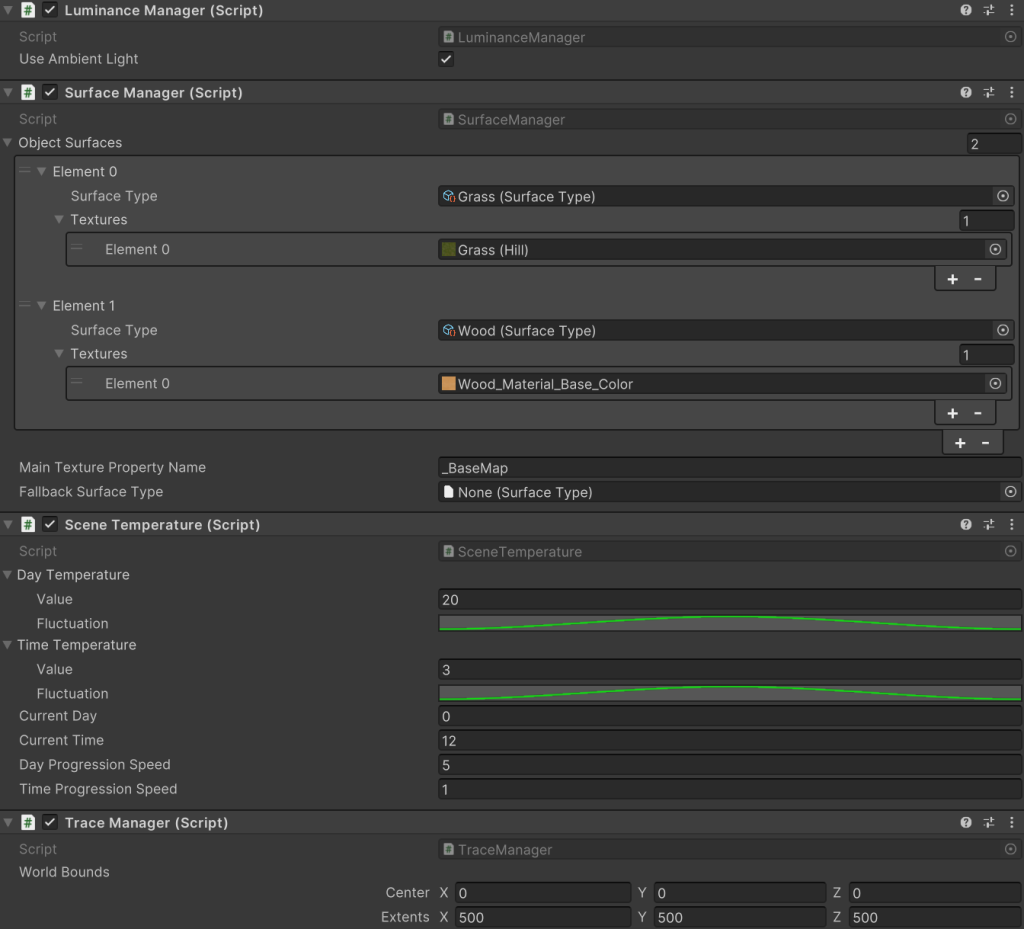

Each light source must have the Luminance Emitter component attached for the light to be included in the luminance calculation. The Luminance Manager is a singleton component that is added to the scene automatically if one isn’t already present. This manager lets you specify whether ambient light should be included in the calculation.
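The two rules in this paragraph (only lights with an emitter count, and ambient light is optional) can be sketched as follows. This is not the Luminance Manager's formula, which is not given here: the `Light` class, the `has_emitter` flag and the linear distance falloff are assumptions chosen to keep the example short.

```python
import math
from dataclasses import dataclass


@dataclass
class Light:
    """Hypothetical light description used only for this sketch."""
    position: tuple
    intensity: float
    range: float
    has_emitter: bool  # stands in for an attached Luminance Emitter


def luminance_at(point, lights, ambient=0.0, use_ambient=True):
    """Sum the contribution of every registered light at the given point."""
    total = ambient if use_ambient else 0.0
    for light in lights:
        if not light.has_emitter:
            continue  # lights without an emitter are ignored entirely
        distance = math.dist(point, light.position)
        if distance >= light.range:
            continue  # the point lies outside this light's range
        # Assumed falloff: linear from full intensity down to zero at range.
        total += light.intensity * (1.0 - distance / light.range)
    return total


lights = [
    Light((0.0, 0.0, 0.0), 2.0, 10.0, has_emitter=True),
    Light((0.0, 0.0, 0.0), 5.0, 10.0, has_emitter=False),
]

print(luminance_at((5.0, 0.0, 0.0), lights, ambient=0.25))
print(luminance_at((5.0, 0.0, 0.0), lights, ambient=0.25, use_ambient=False))
```

The second light is brighter but contributes nothing because it has no emitter, which mirrors the setup requirement described above.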

Surface

New Surface Types can be created by right-clicking within the project tab and selecting Create -> Opsive -> Behavior Designer Pro -> Senses Pack -> Surface Type. The singleton Surface Manager component can be used to specify a corresponding Surface Type per texture. Alternatively, the Surface Identifier component can be added to a collider to specify the Surface Type manually.
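The two ways of assigning a Surface Type can be pictured as a lookup with an optional override. The sketch below is illustrative only: the texture names, the `resolve_surface` helper and the choice to let a manual identifier take precedence over the texture mapping are assumptions, not documented behavior.

```python
# Hypothetical texture-to-surface mapping, as a Surface Manager might hold.
TEXTURE_TO_SURFACE = {
    "grass_01": "Grass",
    "rock_03": "Stone",
}


def resolve_surface(texture_name, identifier=None,
                    texture_map=TEXTURE_TO_SURFACE):
    """Return the surface type for a hit, or None if it is unknown.

    identifier models a Surface Identifier on the collider. This sketch
    assumes it wins over the per-texture mapping when both are present.
    """
    if identifier is not None:
        return identifier
    return texture_map.get(texture_name)


print(resolve_surface("grass_01"))
print(resolve_surface("grass_01", identifier="Mud"))
print(resolve_surface("unknown_texture"))
```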

Temperature

The Temperature Volume component specifies a temperature within the attached trigger. The temperature on this component can be Absolute or Relative. Relative temperatures are added to the base Scene Temperature. The Scene Temperature is a singleton component that allows you to specify temperatures that fluctuate based on the time and day.
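The difference between Absolute and Relative volumes comes down to one addition. The sketch below shows that rule in isolation. The class and function names are hypothetical, the scene temperature is passed in as a plain number rather than derived from the time and day, and overlapping volumes are not handled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TemperatureVolume:
    """Hypothetical model of a Temperature Volume."""
    temperature: float
    relative: bool  # True for Relative, False for Absolute


def effective_temperature(scene_temperature: float,
                          volume: Optional[TemperatureVolume] = None) -> float:
    """Return the temperature an agent would sense at its position."""
    if volume is None:
        return scene_temperature  # outside any volume
    if volume.relative:
        # Relative volumes are offsets added to the base scene temperature.
        return scene_temperature + volume.temperature
    # Absolute volumes replace the scene temperature.
    return volume.temperature


scene = 20.0
campfire = TemperatureVolume(temperature=15.0, relative=True)
freezer = TemperatureVolume(temperature=-5.0, relative=False)

print(effective_temperature(scene))
print(effective_temperature(scene, campfire))
print(effective_temperature(scene, freezer))
```

With a relative volume the sensed value follows the scene temperature as it fluctuates, while an absolute volume stays fixed regardless of the scene.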

Trace

The Trace Manager is a singleton component that specifies the maximum world bounds. If this component is selected while the game is playing, you’ll see a visualization of the traces that have been added along with the bounding octree node. Individual traces can be added with the Trace Emitter component, for example to leave a blood trail. The Trace Emitter can also be used for scent by specifying a Dissipation Time.
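A Dissipation Time implies that a trace weakens as it ages, which the sketch below models. It is a simplified illustration rather than the Trace Manager's implementation: the `Trace` class and helper names are hypothetical, the fade is assumed to be linear, a dissipation time of zero or less is assumed to mean the trace never fades, and traces are kept in a flat list instead of an octree.

```python
from dataclasses import dataclass


@dataclass
class Trace:
    """Hypothetical record of one emitted trace."""
    position: tuple
    emitted_at: float
    dissipation_time: float  # seconds; <= 0 is assumed to mean permanent


def trace_strength(trace, now):
    """Return a value in [0, 1] describing how strong the trace still is."""
    age = now - trace.emitted_at
    if age < 0:
        return 0.0  # not emitted yet
    if trace.dissipation_time <= 0:
        return 1.0  # permanent trace, such as a blood trail
    return max(0.0, 1.0 - age / trace.dissipation_time)


def active_traces(traces, now):
    """Keep only the traces that have not fully dissipated."""
    return [t for t in traces if trace_strength(t, now) > 0.0]


def freshest_trace(traces, now):
    """Pick the strongest remaining trace, or None if all have faded."""
    candidates = active_traces(traces, now)
    return max(candidates, key=lambda t: trace_strength(t, now), default=None)


trail = [
    Trace((0.0, 0.0, 0.0), emitted_at=0.0, dissipation_time=10.0),
    Trace((2.0, 0.0, 0.0), emitted_at=4.0, dissipation_time=10.0),
]

print(trace_strength(trail[0], now=5.0))
print(freshest_trace(trail, now=5.0).position)
print(len(active_traces(trail, now=12.0)))
```

An agent following a scent trail in this model would move toward the freshest trace, since it was emitted most recently and marks the direction the emitter travelled.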